YouTube creators will soon have to disclose use of gen AI in videos or risk suspension

- Restrictions expand on rules the site’s parent company, Google, unveiled in September requiring that political ads on its platforms that use AI come with a warning label

- Site is also using the tech to root out content that breaks its rules

YouTube has expanded its regulations for the use and labeling of generative AI on its site. Photo: Shutterstock

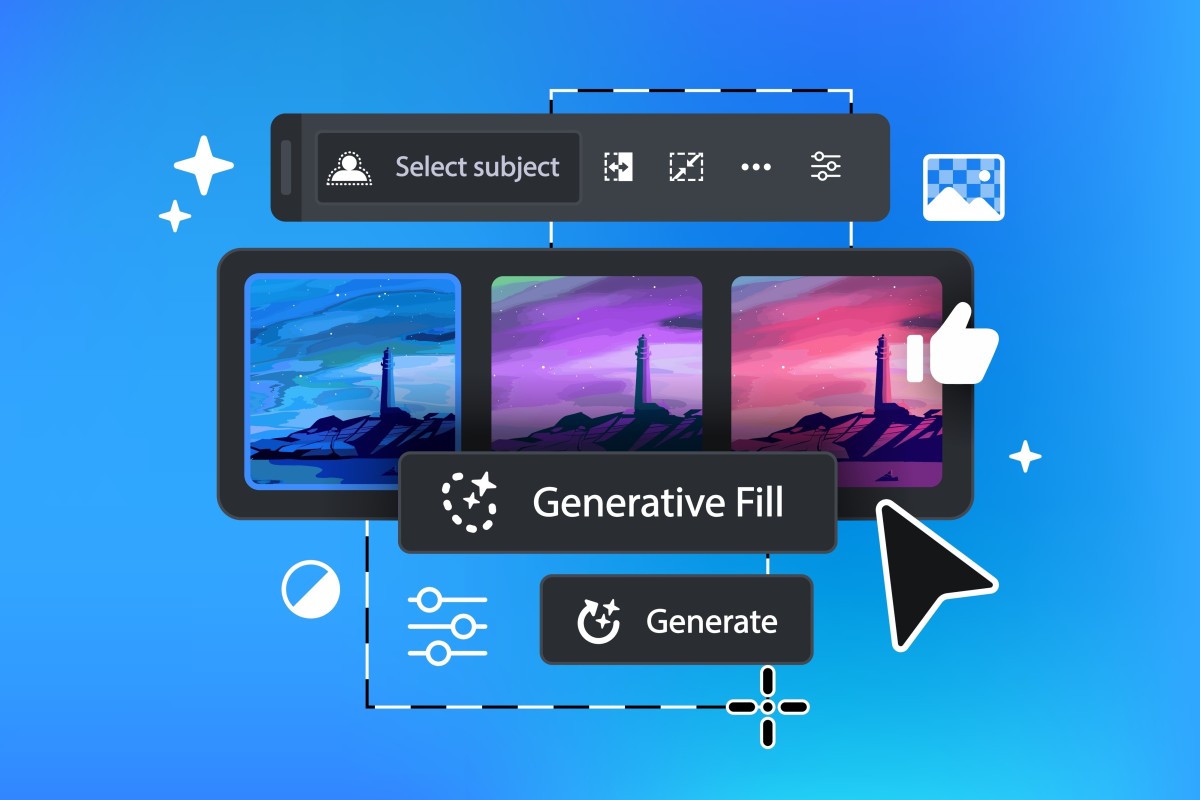

YouTube is rolling out new rules for AI content, including a requirement that creators reveal whether they’ve used generative artificial intelligence to make realistic-looking videos.

In a blog post earlier this week outlining a number of AI-related policy updates, YouTube said creators who don’t disclose whether they’ve used AI tools to make “altered or synthetic” videos face penalties, including removal of their content or suspension from the platform’s revenue-sharing programme.

“Generative AI has the potential to unlock creativity on YouTube and transform the experience for viewers and creators on our platform,” Jennifer Flannery O’Connor and Emily Moxley, vice presidents for product management, wrote in the blog post. “But just as important, these opportunities must be balanced with our responsibility to protect the YouTube community.”


The restrictions expand on rules that YouTube’s parent company, Google, unveiled in September requiring that political ads on YouTube and other Google platforms that use artificial intelligence carry a prominent warning label.

Under the latest changes, which will take effect by next year, YouTubers will get new options to indicate whether they’re posting AI-generated videos that, for example, realistically depict an event that never happened or show someone saying or doing something they didn’t actually do.

“This is especially important in cases where the content discusses sensitive topics, such as elections, ongoing conflicts and public health crises, or public officials,” O’Connor and Moxley said.

Viewers will be alerted to altered videos through labels, including more prominent ones placed on the YouTube video player itself for content about sensitive topics.

The platform is also deploying AI to root out content that breaks its rules, and the company said the technology has helped detect “novel forms of abuse” more quickly.


YouTube’s privacy complaint process will be updated to allow requests for the removal of an AI-generated video that simulates an identifiable person, including their face or voice.

YouTube’s music partners, such as record labels or distributors, will be able to request the takedown of AI-generated music content “that mimics an artist’s unique singing or rapping voice.”