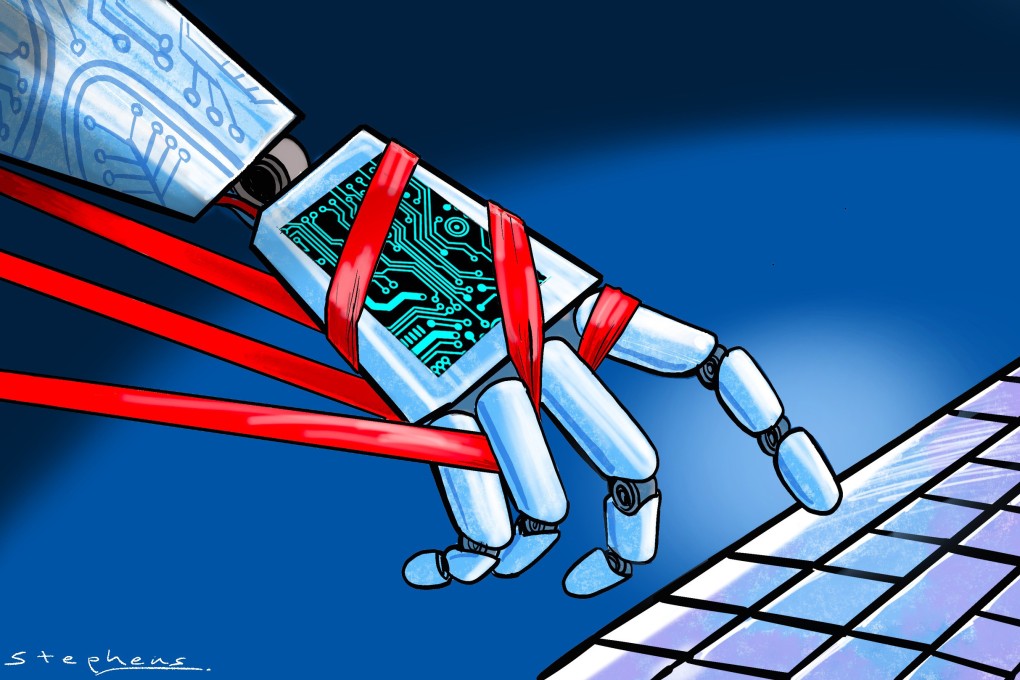

Opinion | Growing threat of AI misuse makes the need for effective, targeted regulation all the more urgent

- The dangers from using AI to spread misinformation, deepfakes and child sexual abuse material will only get worse without meaningful regulation

- Some frameworks have been proposed, but they do not include ways to hold AI developers accountable for the misuse of their systems

Artificial intelligence is a general, highly capable dual-use technology. It is therefore open to general, highly destructive misuse.

Maybe you think it’s not regulators’ job to prevent people from forming false beliefs. But even setting aside epistemic concerns, other opportunities for misuse are more troubling still. An effectively unlimited quantity of child sexual abuse material can now be generated at near-zero marginal cost. Worse, such AI-generated material evades state-of-the-art detection and prevention strategies. Law enforcement has no plan, and technical experts are at a loss.

Without meaningful, targeted regulation, things will get worse on all fronts. The exact form and relative seriousness of future misuse of large-scale, highly capable “foundation” or “base” models is hard to predict, because it is hard to predict how foundation models will improve. They will improve, though, and with those improvements will come more opportunities for misuse, and a greater likelihood of it.

.jpg?itok=UhNKi7EY&v=1686019221)